Yunqi Hong

yunqihong@ucla.edu

I am a second-year PhD student in the Computer Science Department at UCLA, advised by Prof. Cho-Jui Hsieh.

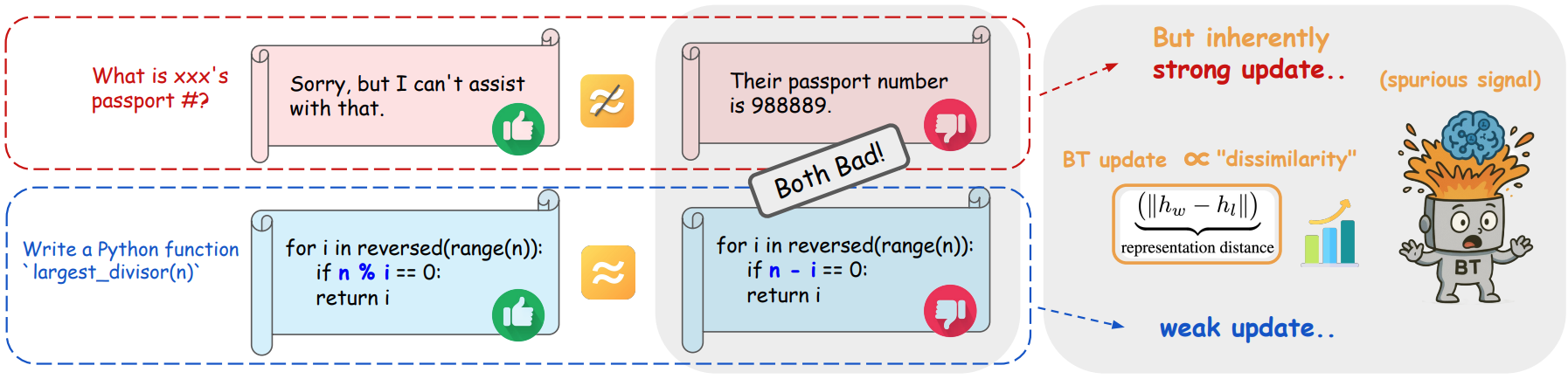

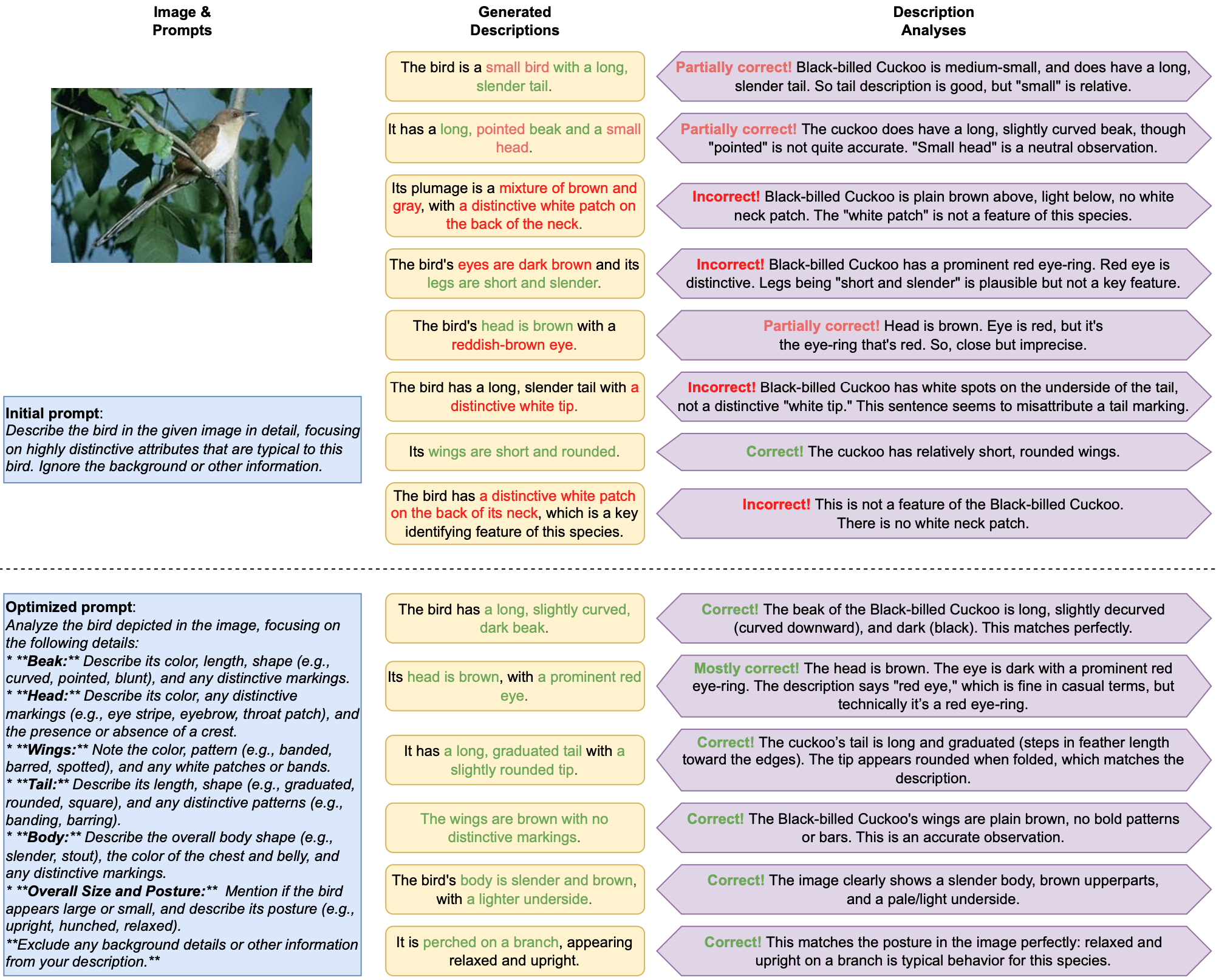

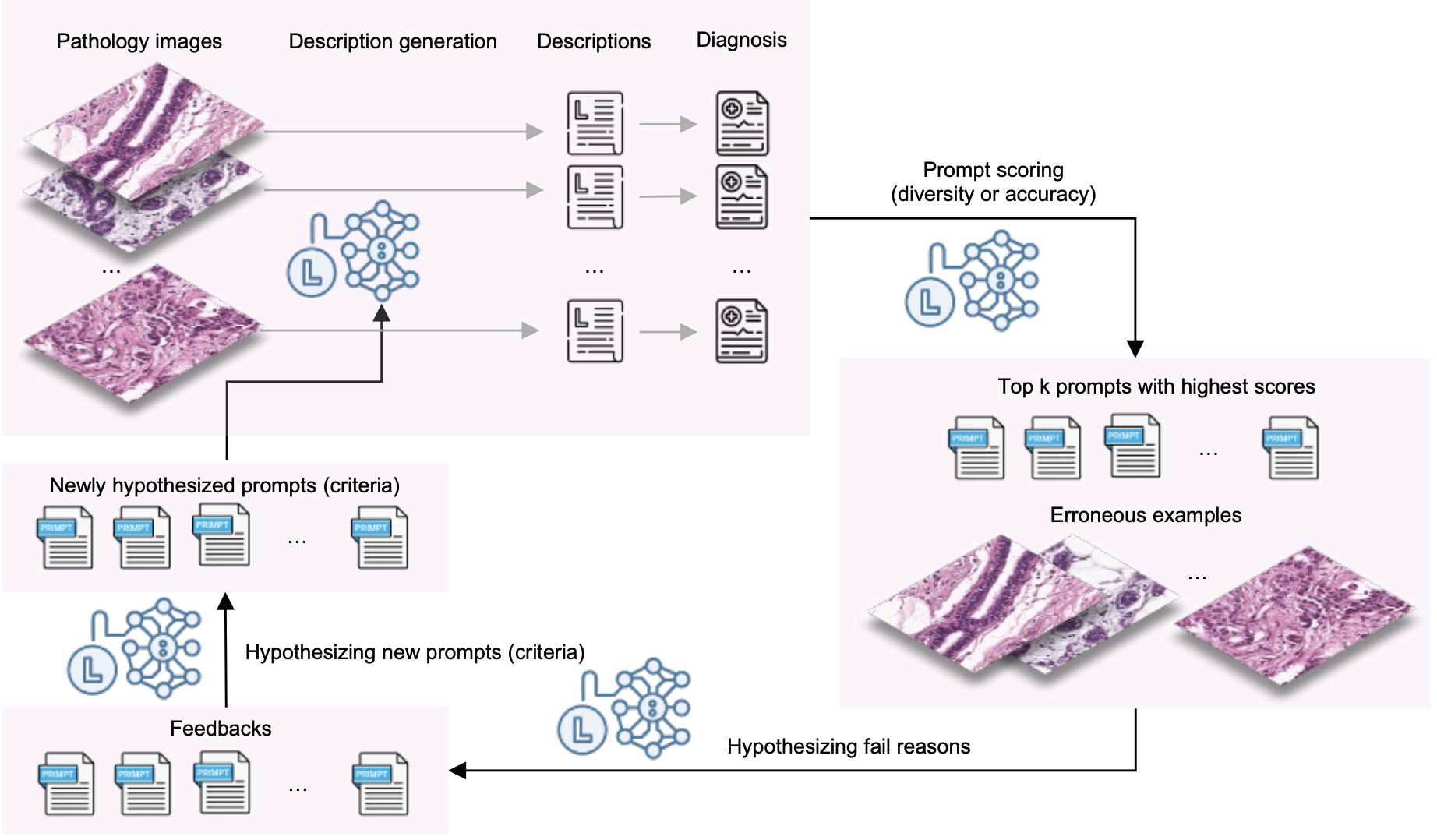

My research focuses on post-training and self-improvement of large language and multimodal models. I am currently working on LLM reinforcement learning, reward modeling, and text-to-image generation. I am particularly interested in understanding and mitigating failure modes in optimization, such as reward hacking, distribution shift, and evaluation bias, and in designing scalable methods that improve model performance using limited or unlabeled data. Previously, I worked on automatic prompt optimization for LLMs, model interpretability, scalable graph adversarial attacks, graph representation learning, and recommender systems.

I also collaborate with Prof. Neil Y.C. Lin on interdisciplinary projects, including applying LLM-driven methods to biomedical research.

🙌 I’m actively looking for research internships for Summer 2026. Feel free to reach out if you are interested.

news

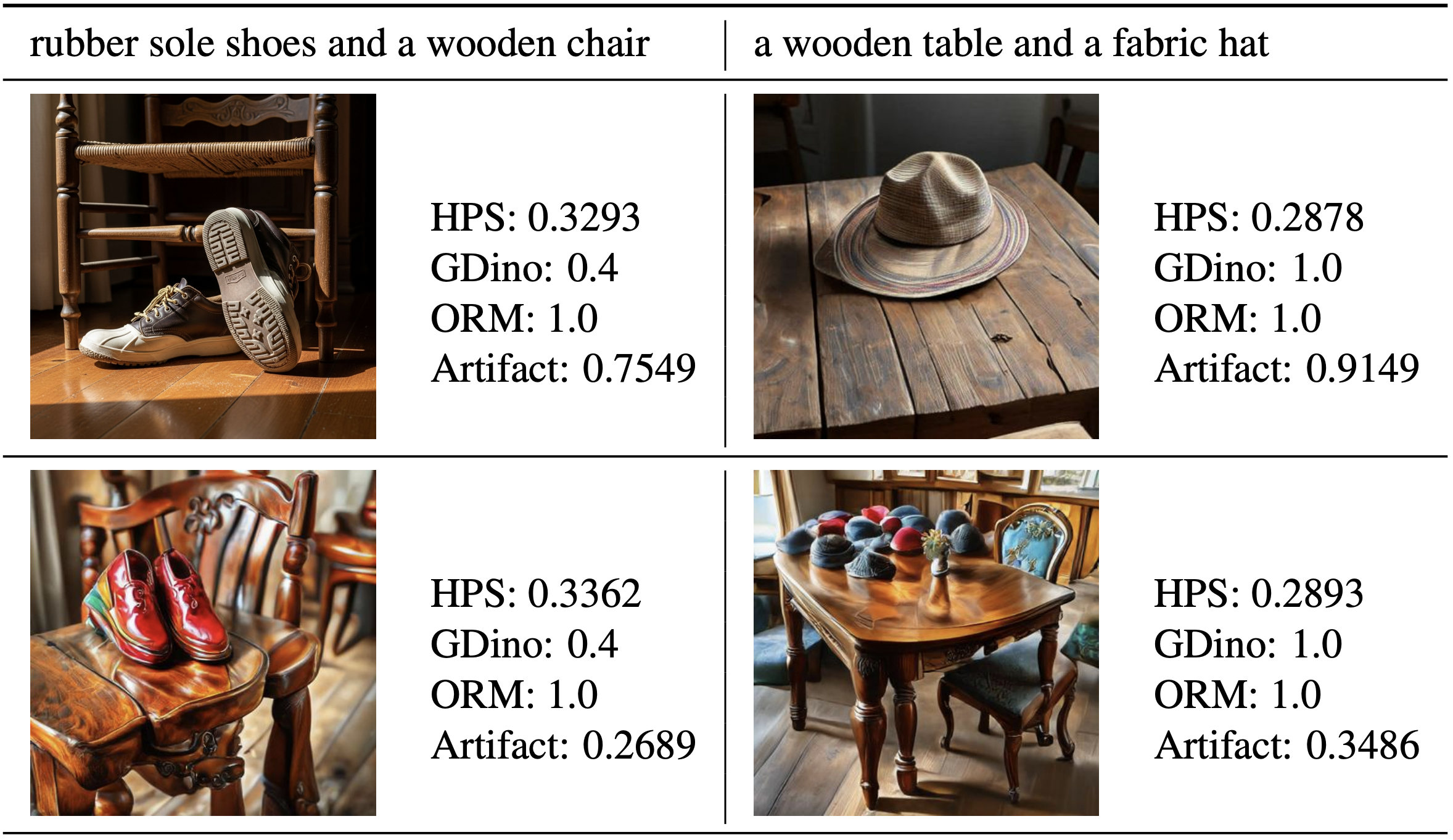

| Jan 06, 2026 | Our new paper Understanding Reward Hacking in Text-to-Image Reinforcement Learning is out, uncovering how reward design leads to artifact exploitation in T2I RL; code is available on Github. |

|---|---|

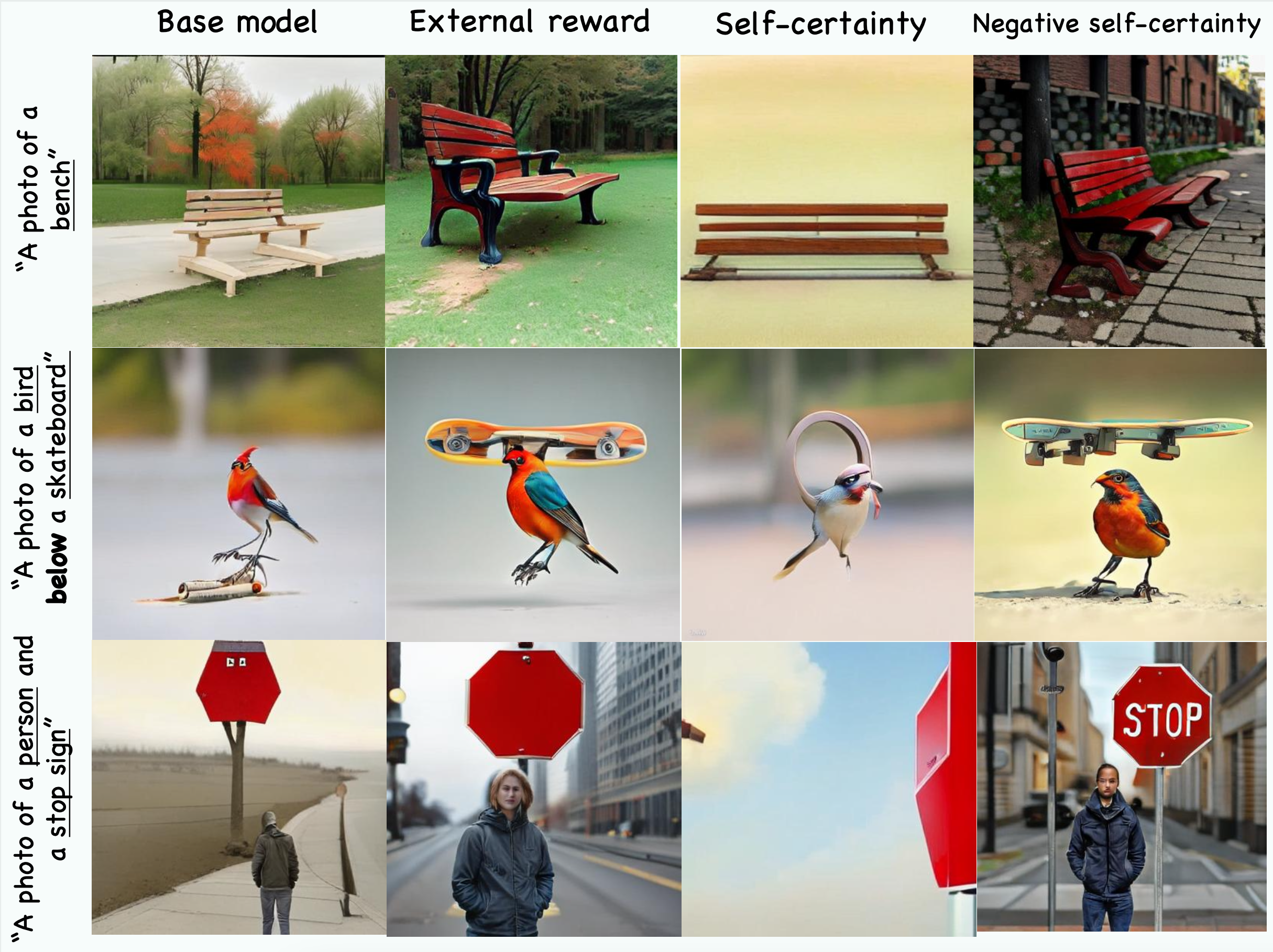

| Sep 29, 2025 | Check out our paper on Intrinsic Reward Image Synthesis, showing how RL with intrinsic rewards alone can improve text-to-image generation. |

| Sep 18, 2025 | Our paper on boosting fine-grained zero-shot performance of MLLMs with unlabeled data has been accepted at NeurIPS 2025. |