Yunqi Hong

yunqihong@ucla.edu

I am a second-year PhD student in the Computer Science Department at UCLA, advised by Prof. Cho-Jui Hsieh.

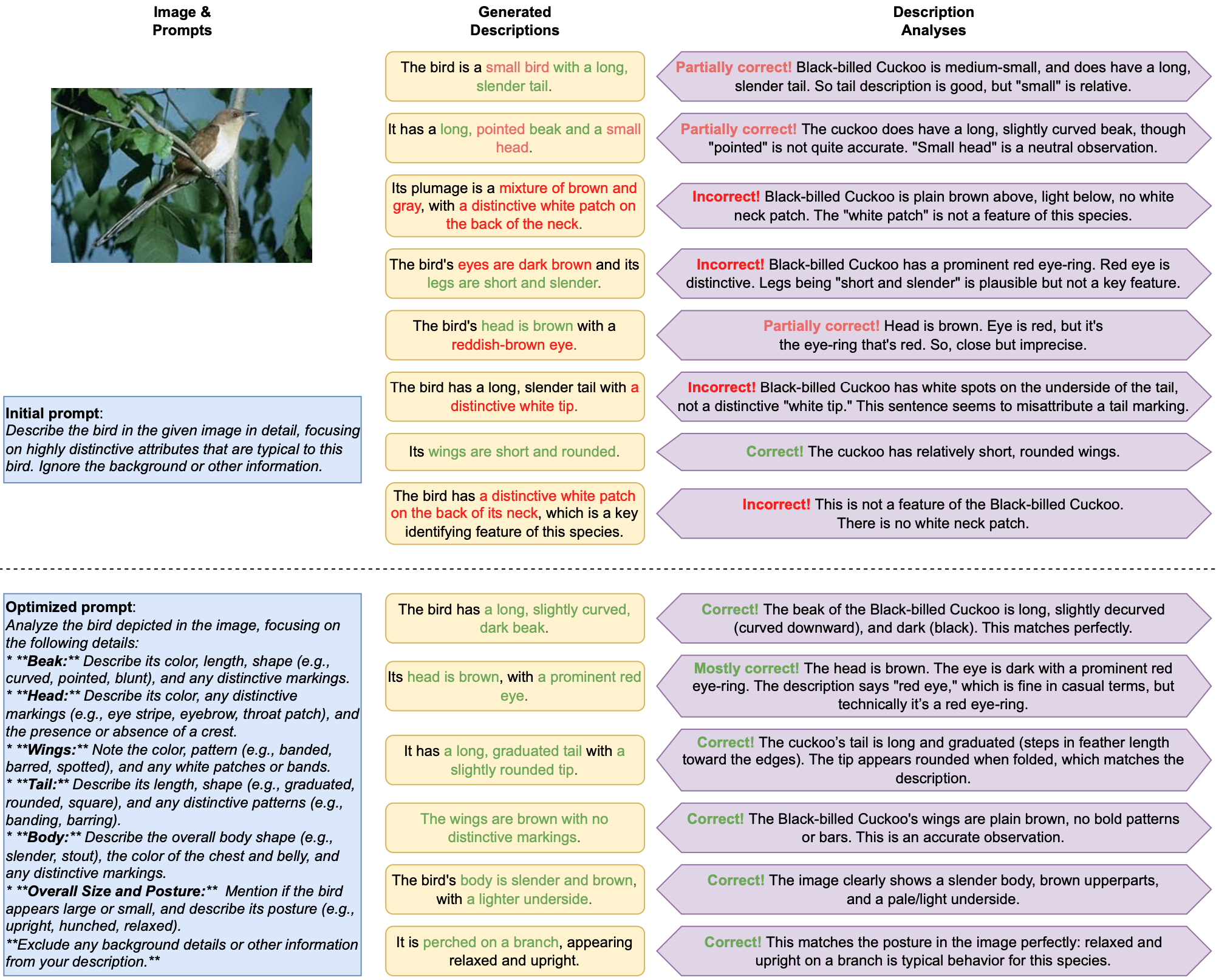

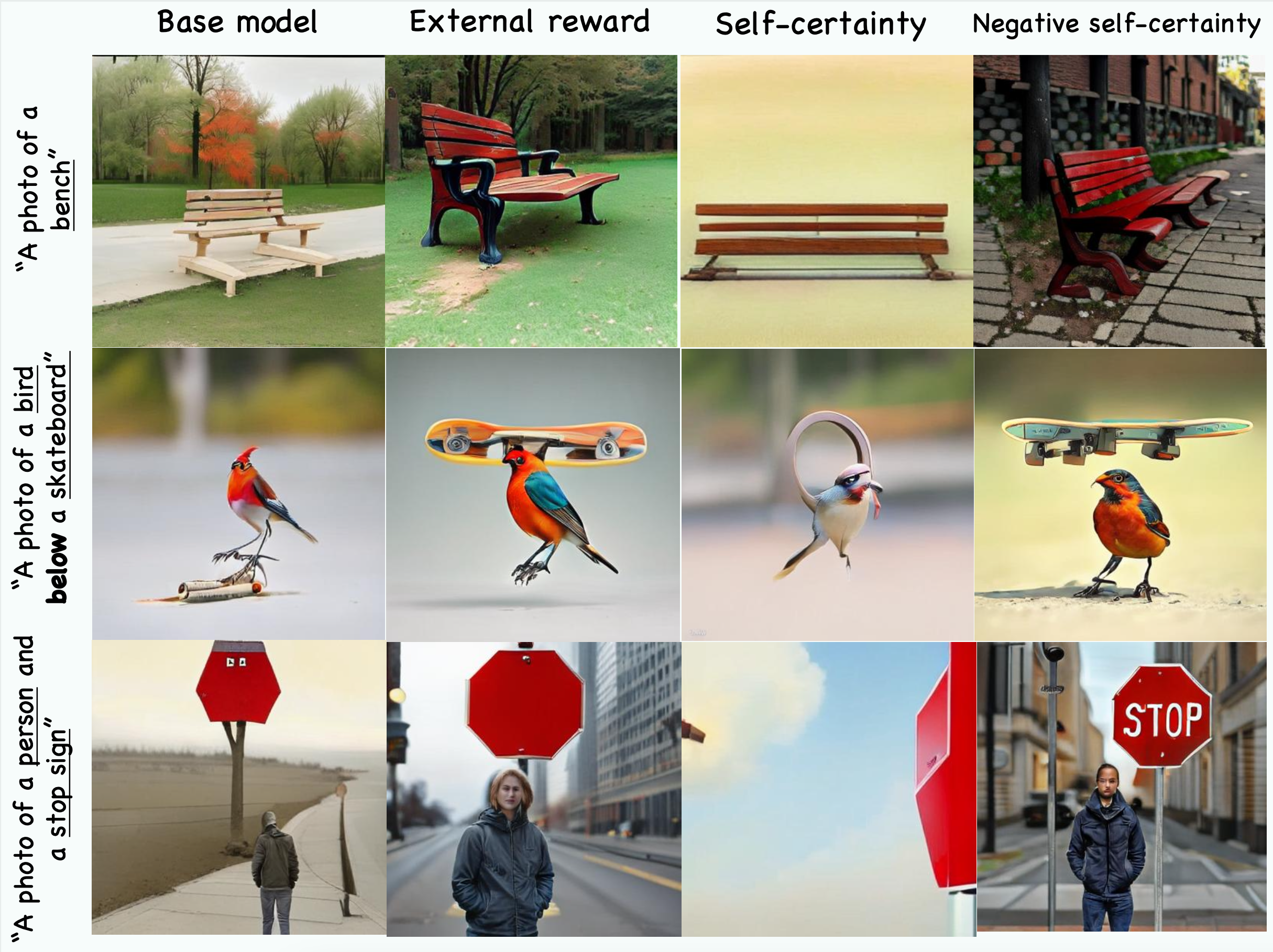

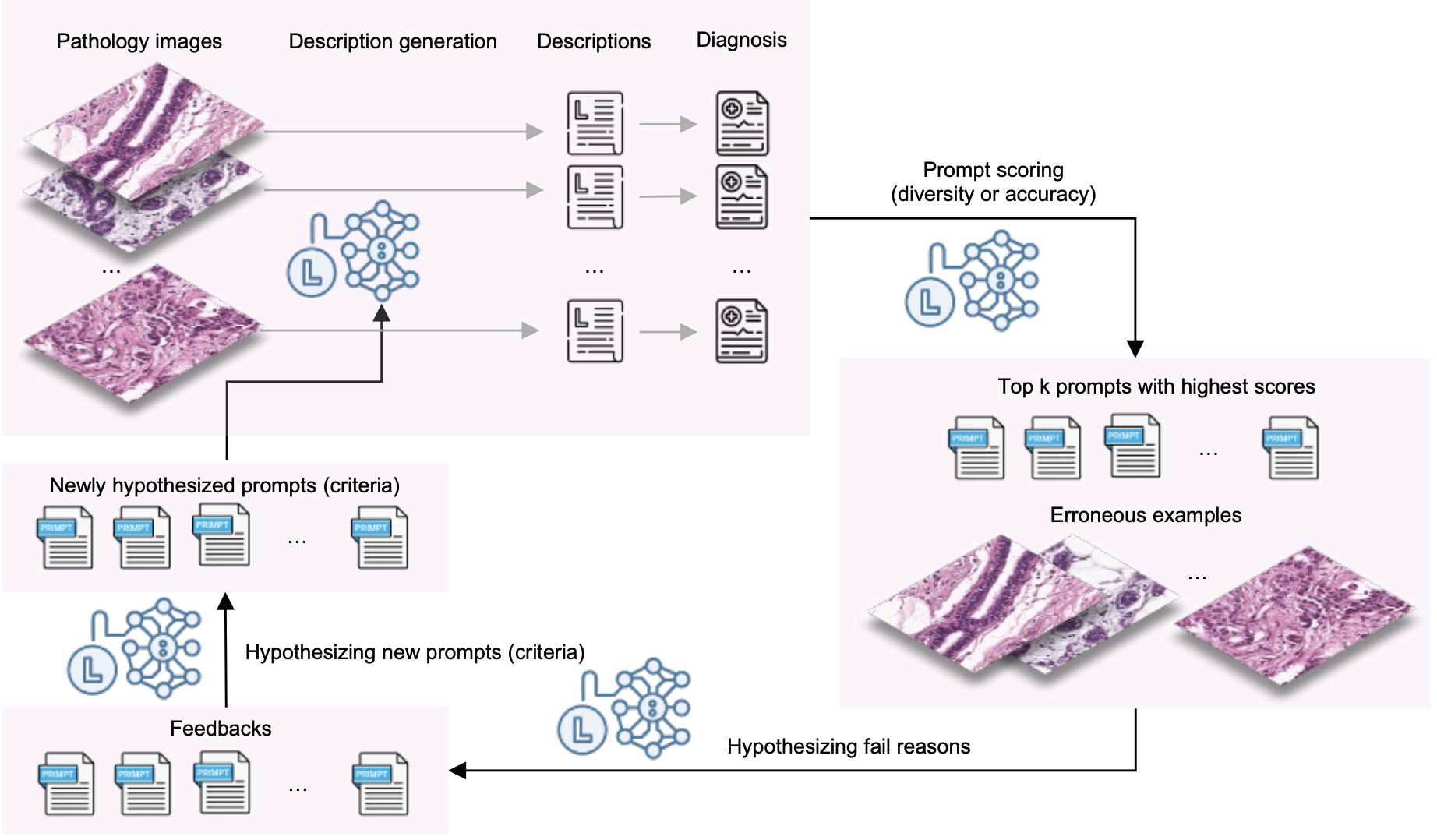

My research focuses on LLM post-training, inference, and downstream applications. I am currently working on LLM reinforcement learning, reward modeling, and text-to-image generation. Previously, I explored automatic prompt optimization for LLMs, model interpretability, scalable graph adversarial attacks, graph representation learning, and recommender systems.

I also collaborate with Prof. Neil Y.C. Lin on developing LLM-driven methods for biomedical research.

🙌 I’m actively looking for research internships for Summer 2026. Feel free to reach out if my work is a good fit for your team.